To further describe the problem, I am interested in downloading the weekly performance statistics for Division 1 College Football, graciously made available by the NCAA here. There are three files, one each for the schedule, offensive and defensive statistics. I really want the package to fire on a weekly basis, and get the new stats updating my data mart.

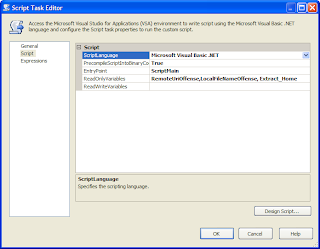

As a brute-force, first-pass effort, I created package variables to represent the remote file uri, the local filename and local path. In the case of the Offensive production statistics, these variables were named RemoteUriOffense, LocalFileNameOffense, and Extract_Home. Unfortunately, one of the downsides of the script task is having to use some variable at the package (or container) level and explicitly declare that variable as being available for read access by the script. An example of that is below:

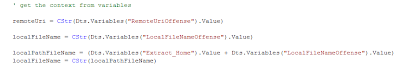

Substituting the variable names declared as readable by the script, in the script code as illustrated below.

While the script task from sqljunkies does get the job done, there are a few disadvantages. First and foremost, creation of the variables for each of the files. I could have done some manipulation of a single set of variables, iterating through for all three files, and using a single script task, firing the task once for each file instance. Additionally, given the variable infrastructure needed for the script task, and the probability this is not the last time I will ever get data via http, this may be a good candidate to create a custom component.

While the script task from sqljunkies does get the job done, there are a few disadvantages. First and foremost, creation of the variables for each of the files. I could have done some manipulation of a single set of variables, iterating through for all three files, and using a single script task, firing the task once for each file instance. Additionally, given the variable infrastructure needed for the script task, and the probability this is not the last time I will ever get data via http, this may be a good candidate to create a custom component.Among the advantages of the custom component, all the logic for file download would be centralized. What happens, in my three-script components when the a change occurs to the download logic (for instance)? Yep, I am replicating the change for each extract. True enough this problem is solved by using a single script task and iterating over a list of files to download.

However, this may just be the perfect task on which to base a first custom component. Stay tuned.